Another little aide-memoire - I want to find all files in a directory containing a specific string that were created on a specific date and copy them to another directory.

Using PowerShell it's quite easy, with just a little wrinkle in the copy-item syntax:

PS C:\SourceFolder> get-childitem | where-object { $_.CreationTime -ge "10/29/2015" -and $_.CreationTime -le "10/30/2015" } | select-string -pattern "80029" | group path | select name | % { $_.Name | copy-item -destination C:\temp\TargetFolder }

Friday, October 30, 2015

Tuesday, September 22, 2015

HTML5, AngularJS and hosting on AWS S3 - Oh my!

I've not done a big "how to" post in a l-o-n-g while, so I thought it'd be useful to document the process of moving from an effectively static ASP.NET MVC web site to an actually static web site that can be hosted directly from an S3 bucket.

Why? Well, my "toy" sites have no real dynamic content, so why maintain a micro-VM on Azure just to host them?

So this will be a step-by-step guide - partly for my own recollection, and also because finding some of the incantations needed to publish a web site successfully to AWS S3 took a fair bit of effort.

Step 0 - Setup

As I'm going to try and maintain these sites 'properly', I'm going to put the source code into GitHub.

joel$ cd Projects/

joel$ mkdir mywebsite.co.uk

joel$ cd mywebsite.co.uk

joel$ git init

Initialized empty Git repository in /Users/joel/Projects/mywebsite.co.uk/.git/

So, I set up a new repository on GitHub with an Apache license and a default README.md file, and connected my empty project folder to that:

joel$ git remote add origin https://github.com/Me/mywebsite.co.uk

joel$ git pull origin master

From https://github.com/Me/mywebsite.co.uk

 * branch master -> FETCH_HEAD

joel$ ls

LICENSE README.md

Step 1 - Scaffolding

Scaffolding a sensibly structured HTML5/AngularJS site is amazingly easy using Yeoman. A quick check first that we're good to go...

joel$ yo --version && bower --version && grunt --version

1.3.2

1.3.12

grunt-cli v0.1.13

then a whole AngularJS web site scaffolded with one command!

joel$ yo angular

...

A commit and push to GitHub gives me a baseline against which I can start working on the site:

joel$ git add .

joel$ git commit -m "Initial scaffolding"

[master 995f8ba] Initial scaffolding

26 files changed, 1640 insertions(+)

...

joel$ git push origin master

...

To https://github.com/Me/mywebsite.co.uk.git

f3525d2..995f8ba master -> master

Step 2 - Working on the site

I've got to admit I really like the workflow that's enabled by using VSCode and grunt file watching - a quick grunt serve and then just edit and save. With a two-monitor setup, this is an absolute dream.

Capturing small changes as individual git commits feels "just right" too.

Step 3 - Setting up publishing to AWS S3

This is where things get interesting.

Setting up a new bucket in S3 is easy - name the bucket after the web site url (mywebsite.co.uk in this example).

We then need to configure a grunt task to publish to that bucket - Rob Morgan has a very good walkthrough here of how to do this. The package I installed is grunt-aws-s3.

joel$ npm install grunt-aws-s3 --save-dev

...

And then we add some lines to the Gruntfile.js file:

    grunt.loadNpmTasks('grunt-aws-s3');

    // Configurable paths for the application
    var appConfig = {
      app: require('./bower.json').appPath || 'app',
      dist: 'dist',
      s3AccessKey: grunt.option('s3AccessKey') || '',
      s3SecretAccessKey: grunt.option('s3SecretAccessKey') || '',
      s3Bucket: grunt.option('s3Bucket') || 'mywebsite.co.uk',
    };

    grunt.initConfig({
      ...
      aws_s3: {
        options: {
          accessKeyId: appConfig.s3AccessKey,
          secretAccessKey: appConfig.s3SecretAccessKey,
          bucket: appConfig.s3Bucket,
          region: 'eu-west-1',
        },
        production: {
          files: [
            { expand: true,
              dest: '.',
              cwd: 'dist/',
              src: ['**'],
              differential: true }
          ]
        }
      }
    });

    grunt.registerTask('deploy', ['build', 'aws_s3']);

Notice that my AWS secrets are injected via grunt command line parameters - so no chance of committing them into GitHub!
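If typing the keys on every deploy gets tedious, one variation is to fall back to environment variables when the command line options are missing. This is only a sketch: the resolveSecret helper and the MYWEBSITE_S3_ACCESS_KEY variable name are my own inventions for illustration, not something grunt or grunt-aws-s3 provides.

```javascript
// Hypothetical helper: prefer the grunt command line option, then fall back
// to an environment variable, and finally to an empty string.
function resolveSecret(optionValue, envName, env) {
  env = env || process.env;
  if (optionValue) {
    return String(optionValue);
  }
  return env[envName] || '';
}

// Example with a fake environment, so nothing real is read or printed.
var fakeEnv = { MYWEBSITE_S3_ACCESS_KEY: 'from-env' };
console.log(resolveSecret(undefined, 'MYWEBSITE_S3_ACCESS_KEY', fakeEnv));
console.log(resolveSecret('from-cli', 'MYWEBSITE_S3_ACCESS_KEY', fakeEnv));
```

In the Gruntfile, the s3AccessKey line could then read resolveSecret(grunt.option('s3AccessKey'), 'MYWEBSITE_S3_ACCESS_KEY'). Either way the secrets stay out of the repository.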

Step 4 - Configuring AWS permissions

The biggest headache I found in this whole process was setting AWS permissions up correctly. I don't really want to push via my super-user account, and if I ever get a build server for all this working, I'd rather have a single user per web site with VERY limited permissions to push changes to AWS S3.

Create a deployment user

In AWS IAM Management, I created a new user called mywebsite.deploy, with an associated Access Key / Secret pair that I downloaded and saved somewhere secure.

There's no way to get back an access key, so be careful not to forget this step, or you'll have to regenerate the key pair!

Actually, Amazon recommend rotating keys on a regular basis, so you'll be doing that anyway - but it's still not what you want to be doing every morning before you start.

Create a deployment group

Again in AWS IAM Management, I created a new group called mywebsite_deployment and added the mywebsite.deploy user to that group.

Next up - permissions.

Grant permissions on the bucket to the group

To do this, we have to add an "Inline policy" to the mywebsite_deployment group to grant basic access to any users in the group:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "s3:*",
          "Resource": "arn:aws:s3:::mywebsite.co.uk"
        }
      ]
    }

Grant restricted rights on the bucket to the deployment user

We don't want the mywebsite.deploy user to be able to do just anything to the bucket (such as change permissions), so we restrict their access rights to the bucket contents by applying a policy to the bucket itself:

    {
      "Id": "Policy1438599268262",
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "Stmt1438599259521",
          "Action": [
            "s3:DeleteObject",
            "s3:GetObject",
            "s3:GetObjectAcl",
            "s3:PutObject",
            "s3:PutObjectAcl"
          ],
          "Effect": "Allow",
          "Resource": "arn:aws:s3:::mywebsite.co.uk/*",
          "Principal": {
            "AWS": [ "arn:aws:iam::765146773618:user/mywebsite.deploy" ]
          }
        }
      ]
    }
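Since the plan is one deployment user per web site, the bucket policy is a good candidate for generating rather than hand-editing. Here's a rough sketch of how that might look - buildBucketPolicy is a hypothetical helper of mine, the account id is a placeholder, and I've left out the optional Id and Sid fields that the AWS policy generator adds.

```javascript
// Hypothetical helper: build a bucket policy that lets one IAM user manage
// objects in the bucket, but not the bucket's own settings.
function buildBucketPolicy(bucket, userArn) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: [
          's3:DeleteObject',
          's3:GetObject',
          's3:GetObjectAcl',
          's3:PutObject',
          's3:PutObjectAcl'
        ],
        // The trailing /* scopes the statement to objects, not the bucket.
        Resource: 'arn:aws:s3:::' + bucket + '/*',
        Principal: { AWS: [userArn] }
      }
    ]
  };
}

// 123456789012 is a placeholder account id, not a real one.
var policy = buildBucketPolicy(
  'mywebsite.co.uk',
  'arn:aws:iam::123456789012:user/mywebsite.deploy'
);
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON can be pasted into the bucket's policy editor. The important detail is the /* on the resource, which is what keeps the user away from the bucket's own permissions.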

Step 5 - Deploying to AWS

With all that set up (phew!), deploying the site to AWS S3 is a one-liner:

joel$ grunt deploy --s3AccessKey=<<your access key>> --s3SecretAccessKey=<<your secret>>

...

16/16 objects uploaded to bucket mywebsite.co.uk/

Done, without errors.

Step 6 - Set up Static Website Hosting

In the AWS S3 console, select the bucket and click on "Properties" to open the properties pane for the bucket.

Open the "Static Website Hosting" section and it's easy to enable hosting just by checking the option. Enter index.html as the default document.

Click "Save", and your content is served from the default endpoint.

Now's a good time to check that your web app runs nicely by just hitting that endpoint in a browser - and get a warm fuzzy feeling.
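If you'd rather work out the endpoint than copy it from the console, it follows a predictable pattern. A small sketch, assuming the dash-style host name that eu-west-1 uses (some regions use a dot instead, so check the Properties pane if in doubt); websiteEndpoint is a made-up helper name.

```javascript
// Hypothetical helper: build the S3 website endpoint for a bucket.
// Assumes the dash style (s3-website-<region>); some regions use a dot.
function websiteEndpoint(bucket, region) {
  return 'http://' + bucket + '.s3-website-' + region + '.amazonaws.com';
}

console.log(websiteEndpoint('mywebsite.co.uk', 'eu-west-1'));
// prints "http://mywebsite.co.uk.s3-website-eu-west-1.amazonaws.com"
```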

Step 7 - Domain setup

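The last thing to do is to switch over the DNS for the target domain so that www.mywebsite.co.uk is a CNAME for the AWS S3 endpoint. One thing to watch: S3 website hosting picks the bucket from the host name in the request, so the bucket name needs to match the host name the CNAME is set up for.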

You can, if you want, set up AWS CloudFront delivery as well, but that's beyond the scope of this how-to.

Wednesday, July 29, 2015

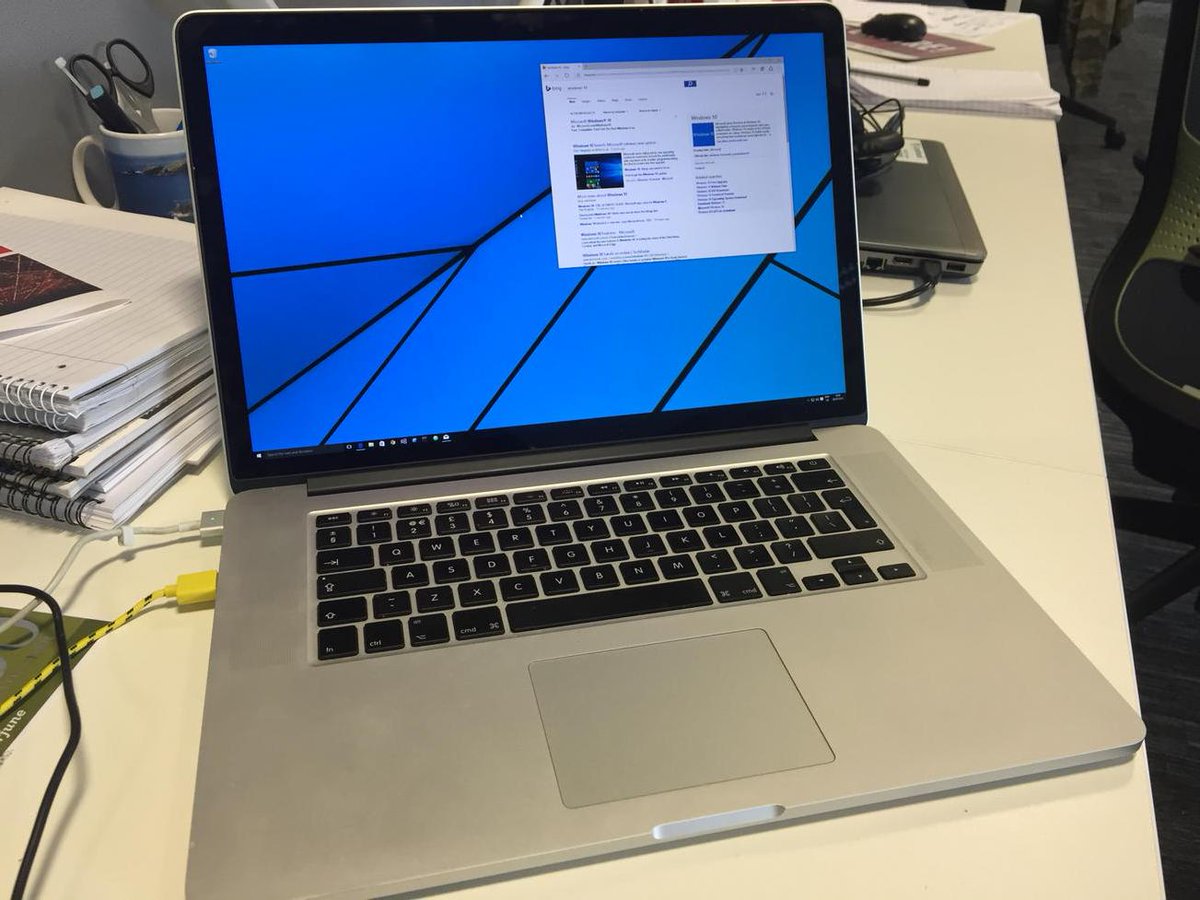

Upgrading a Windows 8.1 VM to Windows 10

So now that Windows 10 has been released, I thought it was time to do more than just "play" with the Technical Preview.

My victim (candidate) was the Windows 8.1 VM I have on my MacBook Pro for dev and demo work - if it all went pear-shaped then I could just blow the VM away and start again.

Issue 1 - I didn't have the "Get Windows 10" icon on my start bar

This has been bugging me for a while, as I've been expecting that to appear automagically over the past month. But it's been stoically absent. Turns out that if you've got a domain-joined PC you'll get the GWX app with the relevant KB 3035583 update, but it'll not work.

So the only way forward was to download the ISO and run the update from that.

Issue 2 - You need 9.5GB free to install Windows 10

Typically, I had less than 1GB free - until Disk Cleanup showed 5GB of temporary files and 4GB of old installers - better than trying to change the size of the physical Boot Camp partition my VM was on.

Installing - was actually pretty easy

About as painless as any other Windows install / upgrade - the install worked fine, letting me sign back in to complete the process... and then

OMG - Black Screen!

It was all going far too well - there had to be a hitch...

rammesses: Slightly worrying that my #Windows10 upgrade has been stuck showing a black screen for the last 20 minutes. Cursor moves, so still #Hopeful (29/07/2015 14:37)

It seems several people have had this problem when a PC (virtual or otherwise) boots for the first time into Windows 10. There's a thread here about it - but the upshot is that you just need a reboot!

In VMware Fusion I just triggered that from the Virtual Machine menu and I was back... but only at 1024x768 resolution.

Drivers!

There wasn't anything I could find on the web indicating whether the Boot Camp and VMware Tools drivers would work with Windows 10 - so I crossed my fingers and gave it a go... and it worked!

All that was left was to tweet that I'd succeeded...

rammesses: #Windows10 running under #VMware Fusion on a MBP in #Retina resolution - #Done http://t.co/30Oeh2lfWM (29/07/2015 16:11)

Tuesday, June 02, 2015

Tuesday Quickie - Transaction Manager Errors are not always what they seem

This one bit me today for the second time, so I thought I'd blog about the problem - more as a reminder to myself than for any other reason.

On one of our environments, database changes weren't being saved with the following cryptic error message:

Communication with the underlying transaction manager has failed. -- COMException - The MSDTC transaction manager was unable to pull the transaction from the source transaction manager due to communication problems. Possible causes are: a firewall is present and it doesn't have an exception for the MSDTC process, the two machines cannot find each other by their NetBIOS names, or the support for network transactions is not enabled for one of the two transaction managers.

The actual cause?

A single rogue space in the connection string.

Go figure!

Wednesday, May 20, 2015

Tuesday Quickie - Suppressing SignalR in the Developer Console

This quickie came from a conversation Bart Read and I had on Twitter about how hard it is to use the Network tab in the Chrome Developer Tools with all the traffic SignalR generates.

In the end, I had a brainwave and found a simple solution - with a little digging and experimentation.

All you need to do is click on the filter icon on the Network tab's toolbar and enter the following magic incantation:

-transport -negotiate

This pretty much kills all the SignalR traffic and lets you get back to debugging your own code.
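For the curious, here's roughly why that works. Each term starting with a minus sign hides any request whose URL contains that text, and SignalR's requests carry either "negotiate" or a "transport" query parameter. The sketch below is only my approximation of the filter's behaviour, not Chrome's actual code, and the request URLs are made up.

```javascript
// Rough model of a negative text filter: a request is hidden when its URL
// contains any of the excluded terms. This approximates, not reproduces,
// what the Chrome Network tab does.
function applyNegativeFilter(urls, filterText) {
  var excluded = filterText
    .split(/\s+/)
    .filter(function (term) { return term.charAt(0) === '-' && term.length > 1; })
    .map(function (term) { return term.slice(1).toLowerCase(); });

  return urls.filter(function (url) {
    var lower = url.toLowerCase();
    return !excluded.some(function (term) { return lower.indexOf(term) !== -1; });
  });
}

// Made-up request URLs for illustration.
var requests = [
  '/signalr/negotiate?clientProtocol=1.4',
  '/signalr/poll?transport=longPolling',
  '/api/orders/42',
  '/scripts/app.js'
];
console.log(applyNegativeFilter(requests, '-transport -negotiate'));
// prints [ '/api/orders/42', '/scripts/app.js' ]
```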

Friday, February 27, 2015

Friday Quickie - dumping parameters from a TFS build definition

So here's the scenario - Ops have changed the TFS build infrastructure underneath you and the build definitions for an "older" project aren't working.

You open the build definition in Team Explorer, and lo and behold, there's a warning triangle on the Process tab.

Open that, and you find that one of the properties (in this case a deployment script) is now empty and showing an error circle.

The problem is that because it's invalid, the editor has cleared the property - even opening the dialog doesn't help - it's all gone.

So how do you find out what the property WAS so you can fix it?

Well, first, close the build definition WITHOUT SAVING IT!

Next, fire up a Visual Studio Command Prompt and CD to the folder that's mapped to the root of the source code in TFS.

What you need is the command-line tfpt.exe tool from TF Power Tools (you had that installed already, didn't you?). This has a handy BuildDefinition /dump option that will show you what's in the build definition, even though it's invalid. Redirect its output to a text file so you can read it at leisure.

You can now open the text file in notepad and see what the property WAS - job done.
